What Is Phase?

Phase is the timing relationship between sound waves. And once sound waves turn into electrical signals, it's the time relationship between those electrical signals (represented digitally, in your DAW).

OK, that doesn't really help us. Let's think about it this way.

Sound is pressure waves, consisting of peaks and troughs of air pressure, traveling rapidly through the air. In a DAW, this is represented by a waveform in your recording project. Sometimes that waveform is above the zero line, and sometimes below.

Well, technically that signal has been digitized and turned into ones and zeroes. You can think of them as positive and negative numbers if you want to.

Now, what happens when a waveform above the zero line meets another waveform below the zero line? The same thing that happens when you add a positive and a negative number, or when a high pressure and a low pressure part of a wave try to occupy the same space -- they cancel each other out, that's what!

If the opposing sound waves or signals are exact opposites, they cancel completely.
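Here's a quick way to see that with numbers. This is only a sketch in Python, not something taken from any DAW; the helper names `sine`, `mix` and `peak` are made up for the example, and the 100Hz tone and 48kHz sample rate are arbitrary choices.

```python
import math

def sine(freq_hz, sample_rate, num_samples):
    """Return a list of samples for a sine wave."""
    return [math.sin(2 * math.pi * freq_hz * n / sample_rate)
            for n in range(num_samples)]

def mix(a, b):
    """Sum two signals sample by sample, the way a mixer does."""
    return [x + y for x, y in zip(a, b)]

def peak(signal):
    """Largest absolute sample value."""
    return max(abs(s) for s in signal)

original = sine(100, 48000, 480)        # one full cycle of a 100Hz tone
inverted = [-s for s in original]       # the exact opposite waveform

print(peak(mix(original, original)))    # reinforced: about twice as big
print(peak(mix(original, inverted)))    # exact opposites: nothing left
```

Two identical waves add up to double the level. A wave plus its exact opposite adds up to silence.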

Understanding Phase

Complex Soundwaves

Different wavelengths of sound are traveling out from any sound source, bouncing off things, combining and mixing. Since these are high and low pressure waves, high pressure zones are mixing with low pressure zones and partially or completely cancelling each other out. Other sound waves are being reinforced. This sounds natural to our ears.

Whether waves are reinforced or lessened depends on timing.

Wavelength & Frequency

Different frequencies of sound have different wavelengths. A wavelength is the distance a sound wave travels while it completes one full cycle of high and low pressure. A low E on a guitar in standard tuning has a wavelength of 13.657 ft, or 4.163 meters, at standard air temperature and pressure.

Frequency is measured in cycles/second, or Hertz (Hz). 1,000Hz is 1kHz.

The range of human hearing is about 20Hz - 20kHz. At 20kHz, the sound's wavelength is a little over 1/2" (.6752 inches), or 1.715 centimeters. At 20Hz, it's 17.15 meters, or 56'3".

So, you've got sound waves vibrating from 20 times/second, to 20,000 times/second. The wavelengths are from 56 feet, to 1/2". And that's just the sounds we can hear.
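If you want to work out a wavelength yourself, it's just the speed of sound divided by the frequency. The sketch below assumes a speed of sound of 343 meters per second (roughly room temperature air), so the results can differ slightly in the last digit from figures calculated with a different value. The function name `wavelength_m` is made up for this example.

```python
SPEED_OF_SOUND_M_S = 343.0  # assumed: dry air at about 20 degrees C

def wavelength_m(freq_hz, speed=SPEED_OF_SOUND_M_S):
    """Wavelength in meters = speed of sound / frequency."""
    return speed / freq_hz

for label, freq in [("20Hz", 20.0),
                    ("low E, 82.41Hz", 82.41),
                    ("20kHz", 20000.0)]:
    meters = wavelength_m(freq)
    feet = meters / 0.3048
    print(f"{label}: {meters:.4f} m ({feet:.2f} ft)")
```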

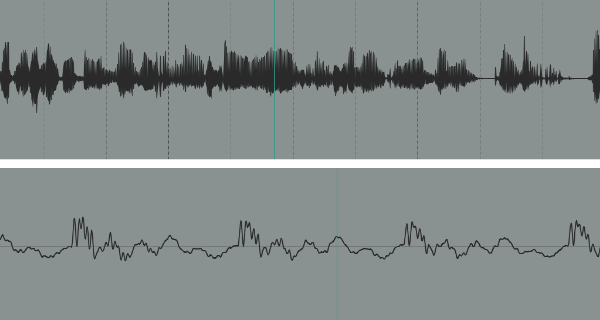

As you can imagine, the waveforms we capture in the DAW don't look like the simple sine waves you see above. They're complex. They look more like this . . .

Vocal waveforms, zoomed in and out

That's my voice. What you're seeing there are the interactions between various frequencies, picked up by the microphone. All sorts of phase interactions are happening. However, the phase interactions are not stable.

My voice isn't a sine wave. It's made up of multiple frequencies that are constantly shifting. The volume shifts as well, and I move a little bit, even when I'm trying to stay a constant distance from the mic.

So, the phase relationships constantly shift. This is important. It's when we have stable phase relationships that trouble can occur. Stable phase relationships can happen when we have two or more microphones in a room, for instance. In this case, the mics might be picking up a very similar sound (stable) but the sound arrives at the mics at different times, because they're at different distances from the sound source.

This distance is also stable, since the mics and the sound source are stationary. So, any phase issues will be stable and sound unnatural. For instance, phase cancellation might cause a certain frequency and its harmonics to be cut drastically, while other frequencies are boosted.

This is called comb filtering.

The Comb Filtering effect as a result of phase issues.
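You can estimate where the notches of the comb land. This sketch assumes an idealized case: two mics picking up the same source at equal level, with one path longer than the other, and a speed of sound of 343 m/s. Under those assumptions the cancelled frequencies are the ones where the delay equals half a cycle, one and a half cycles, two and a half cycles, and so on. The function name `comb_notches_hz` is hypothetical.

```python
SPEED_OF_SOUND_M_S = 343.0  # assumed speed of sound

def comb_notches_hz(path_difference_m, max_hz=20000.0,
                    speed=SPEED_OF_SOUND_M_S):
    """Frequencies that cancel when a signal is mixed with a delayed copy."""
    if path_difference_m <= 0:
        return []  # no delay, no comb filter
    delay_s = path_difference_m / speed
    notches = []
    k = 0
    while True:
        freq = (2 * k + 1) / (2 * delay_s)  # odd multiples of half a cycle
        if freq > max_hz:
            break
        notches.append(freq)
        k += 1
    return notches

# One mic 34.3 cm further from the source: about 1 ms of delay.
print([round(f) for f in comb_notches_hz(0.343)[:3]])  # [500, 1500, 2500]
```

The notches are evenly spaced, which is what gives the frequency plot its comb-like teeth. Real rooms add reflections and level differences, so the dips you actually get are usually shallower than this ideal case.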

Standing Waves

Another source of phase problems caused by stable phase relationships is standing waves. Have you ever noticed that recording studios try to avoid parallel surfaces where possible?

In a typical home, the walls are parallel and the ceiling is parallel to the floor. Windows are all straight up and down. But in a recording studio, the glass in the control room is tilted. Where possible, walls aren't parallel, nor are the ceiling and floor.

This is because of standing waves.

If you blow across the top of an empty bottle, it makes a note. Blow across the top of a bigger bottle, and you get a lower note. This is because frequencies have different wavelengths. Since the bottle is symmetrical, some wavelengths bounce around it and are in phase, while others are out of phase.

The in-phase waves, which dominate the sound and last longer after you stop blowing, are called standing waves.

In many ways a recording studio will try to avoid parallel surfaces, so that standing waves don't build up. Think of it this way. If you have a parallel floor and ceiling, whatever frequency 'fits' that distance will be emphasized across the entire room. If your ceiling has a slight rise to it, different frequencies are emphasized in each place in the room. It's diffuse.
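To get a rough idea of which frequencies 'fit' between two parallel surfaces, you can use the textbook formula for simple axial modes: the speed of sound divided by twice the distance, plus whole-number multiples of that. This is an idealized sketch. It assumes hard, perfectly parallel surfaces and a speed of sound of 343 m/s, and the 2.44 meter ceiling height is just a made-up example.

```python
SPEED_OF_SOUND_M_S = 343.0  # assumed: air at roughly room temperature

def axial_modes_hz(distance_m, count=4, speed=SPEED_OF_SOUND_M_S):
    """First few standing-wave frequencies between two parallel surfaces."""
    fundamental = speed / (2 * distance_m)
    return [fundamental * n for n in range(1, count + 1)]

ceiling_height_m = 2.44  # hypothetical ceiling, about 8 feet
for freq in axial_modes_hz(ceiling_height_m):
    print(f"{freq:.1f} Hz")
```

Run it for your own room's length, width and height. Frequencies that show up in more than one dimension are the ones most likely to build up.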

Standing Wave Build-up

What's the problem with standing waves? Well for one, every mic you put in a room that has significant standing waves, will pick up more of those standing wave frequencies. If you record 15 tracks in the same room, the buildup at those frequencies can be significant.

Fooling Your Ears

Secondly, standing waves can fool your ears. If you have a standing wave at 60Hz in the room where you mix, you're going to hear extra volume in that low-end area. You might think your kick drum is really thumpin', but when you take the mix out to your AMC Pacer to listen to it in the car, it sounds like corn flakes soaked in milk for too long.

Why Do We Care About Phase?

Phase issues sometimes arise when there are multiple microphones which are combined -- either immediately, or later, in a mix. Phase can make things sound thin, or add a weird character, sort of like a jet engine sound.

In some cases, phase cancellation can make a sound disappear, or nearly disappear. So, it's important to understand what causes phase issues, and how to solve them.

Mono Compatibility

- Phase issues can also be hard to detect in stereo, but might appear when a song is played in mono. Making sure things sound good in mono is called checking for mono compatibility.

- Mono, AM radio is not the important thing it was 30 years ago. But some people have mono Bluetooth speakers they listen to, a few phones still have mono speakers, and some club and arena PA systems are in mono.

If any of these is important to you, check your mixes in mono. Most DAWs have a mono button so you can check easily.
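Here's a small sketch of why something can sound fine in stereo and vanish in mono. It assumes a simple mono fold-down that averages left and right (actual mono buttons may scale the sum differently), and it uses a made-up test case: a 1kHz tone on the left and the same tone delayed by half a millisecond on the right. The helper names are hypothetical.

```python
import math

SAMPLE_RATE = 48000

def tone(freq_hz, num_samples, delay_samples=0):
    """A sine tone, optionally starting a few samples late."""
    return [math.sin(2 * math.pi * freq_hz * (n - delay_samples) / SAMPLE_RATE)
            for n in range(num_samples)]

def rms(signal):
    """Average level of a signal."""
    return math.sqrt(sum(s * s for s in signal) / len(signal))

def to_mono(left, right):
    """Assumed mono fold-down: the average of both channels."""
    return [(l + r) / 2 for l, r in zip(left, right)]

left = tone(1000, 4800)
right = tone(1000, 4800, delay_samples=24)  # 0.5 ms late: half a cycle at 1kHz

print(f"left:  {rms(left):.3f}")
print(f"right: {rms(right):.3f}")
print(f"mono:  {rms(to_mono(left, right)):.3f}")
```

Each channel on its own is at full level, but the mono sum is essentially silent, because at 1kHz a half-millisecond delay puts the two sides exactly out of phase.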

Just Using One Microphone At A Time?

I record with one mic at a time, with rare exceptions. I sometimes have an acoustic that I might mic, which also has a pickup I run direct (a pickup is essentially a microphone). Other than that it's all direct recording and virtual instruments. Even if I do multiple layers of background vocals, I do one track and one mic at a time. I only have one voice.

Since there aren't multiple mics in the room at one time, I don't have many instances in which I might have phase problems. If you're in my situation, you probably don't have phase problems either.

Stereo Mic Techniques

Sometimes we want to capture a sound in stereo, to get a sense of width in the recording. In this case, we need to put more than one mic in the room. That means phase problems are possible. How do we avoid or minimize them? Stereo microphone techniques.

Some of these stereo microphone techniques may be best with a matched pair of microphones. But if you have a couple of mics lying around, you can try them regardless.

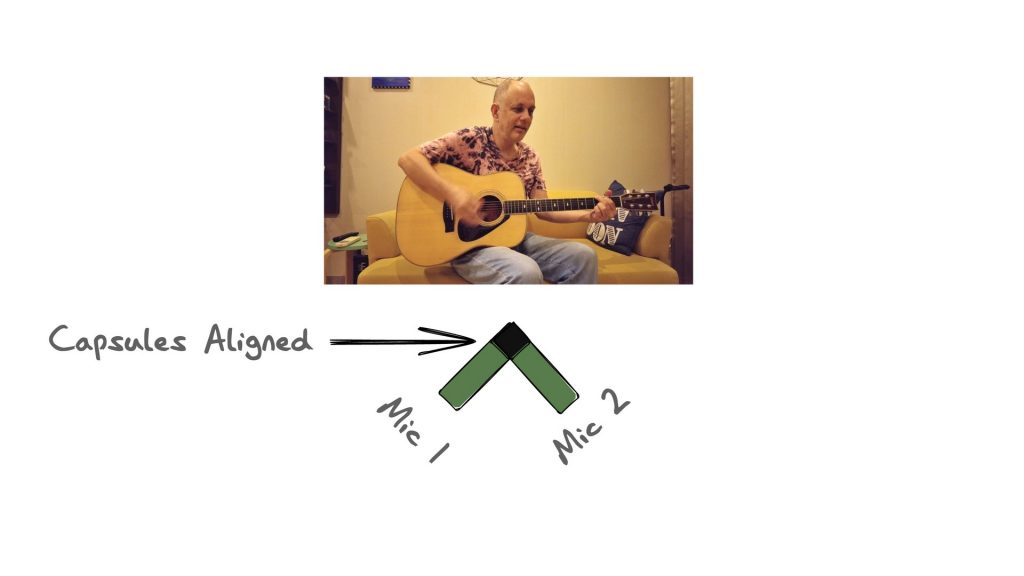

XY Technique

The XY stereo microphone technique puts the elements of two mics (usually a matched pair), as close to each other as possible, to minimize any phase problems. The backs of the mics are angled away from each other, usually at about 90 degrees.

XY offers a reasonable stereo field with excellent mono compatibility.

Why does the XY technique sound good in mono? The elements of the mics are the same distance from the sound source. So sound will arrive at both, at the same time, in phase.

ORTF Stereo

The ORTF stereo microphone technique was invented at the French broadcasting company, Office de Radiodiffusion Télévision Française. ORTF uses two cardioid microphones, with the capsules placed 17cm apart (6.7″) and angled at 110 degrees, with the butts toward each other. This method mimics human hearing and gives a wider stereo image compared to XY, without losing center information.

The mic elements are placed about the same distance apart as ears on a normal human (although I'm sure your bulbous head is bigger).

Compared to the XY, the technique has a wider stereo image, but is a bit less mono compatible (although still reasonable).

Spaced Pair

No, I'm not talking about Cheech and Chong. A spaced pair is just a couple of mics, usually placed 3-10 feet apart (about 1-3 meters), aimed at a sound source. Now your head probably isn't 3 meters wide. And your ears are probably on the sides of your head. So this technique can produce a much wider stereo image than you would hear naturally.

That might be what you need, or not.

But this technique is not particularly mono compatible. If you use a spaced pair and you want to sound good in mono, check in mono and experiment with changing the mic positions if you have problems.

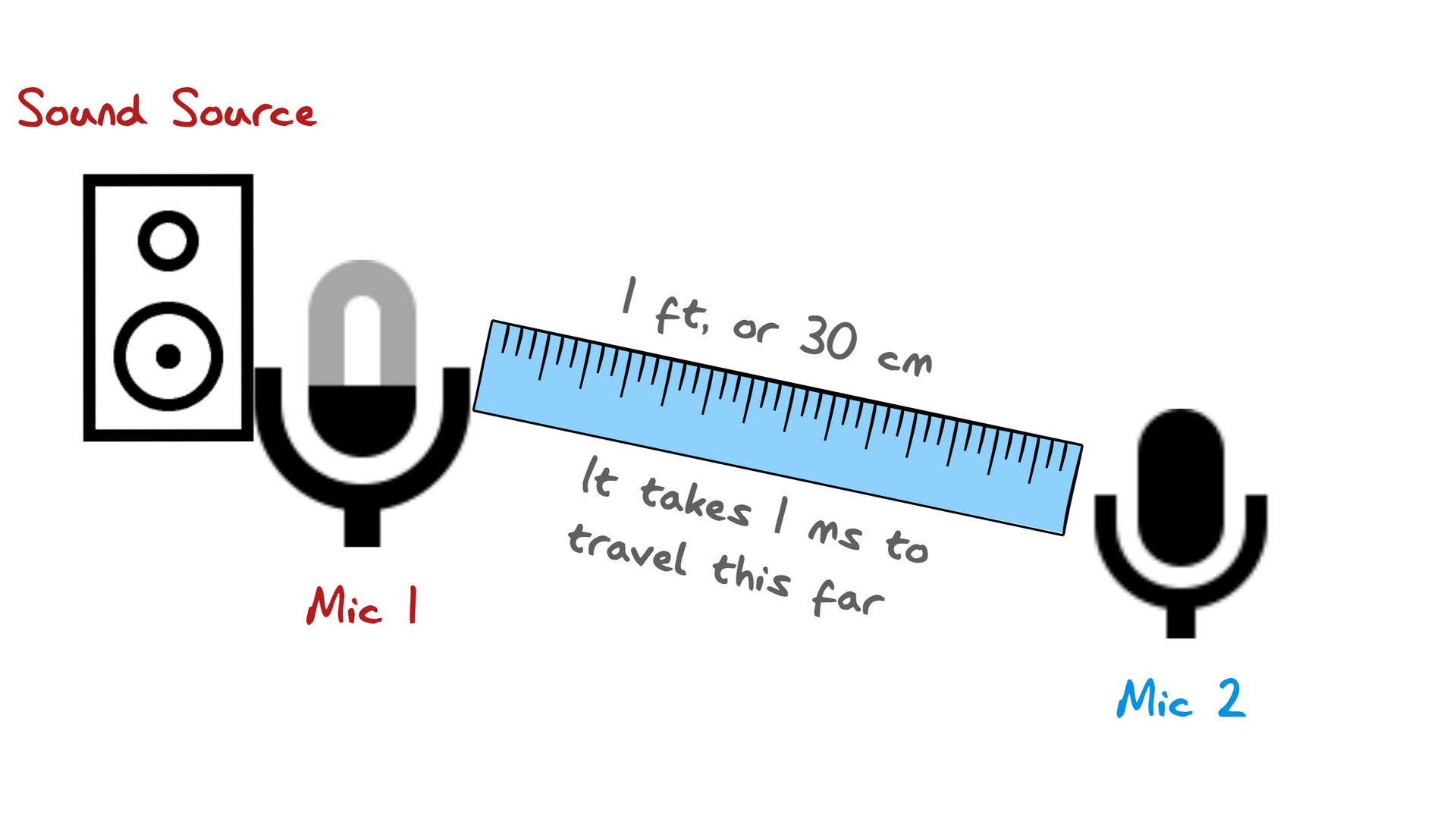

The 3:1 Rule

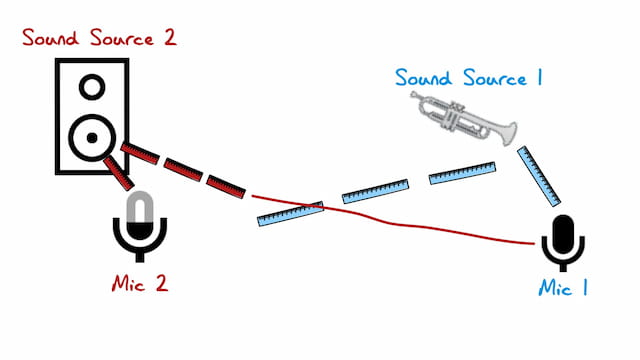

When you don't want to mic in stereo, but you still have multiple mics up at once, you'll want to pay attention to the 3-to-1 rule. The 3-to-1 rule basically says each microphone should be much closer to its own sound source than any other microphone is. Three times closer, in fact.

So, if mic one is 1 foot from sound source 1, mic 2 should be at least 3 feet from sound source 1. And if mic 2 is 15 centimeters from sound source 2, mic 1 should be at least 45 centimeters away from sound source 2.

Basically, mics should be close to what they're supposed to pick up, and far away from other sound sources.

3 to 1 rule

Following this rule doesn't eliminate phase cancellation. It just brings it down in level. If you watched the video on phase, you know that in order to completely phase cancel a signal and make it disappear, you need sounds that are not only completely out of phase, but of equal level. Turn one sound down and the cancellation is less.

This method keeps the levels of the instruments down in the mics that aren't meant to record them. Thus, any phase problems are low level.
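To put rough numbers on that, here's a sketch using the inverse-distance law, where level drops about 6 dB every time the distance doubles. That law is an assumption: it describes an open space with no reflections, identical mics and identical gain settings, so a real room will behave less tidily. The function names are made up for the example.

```python
import math

def bleed_level_db(distance_ratio):
    """Level in the far mic relative to the near mic (inverse-distance law)."""
    return -20 * math.log10(distance_ratio)

def worst_case_ripple_db(distance_ratio):
    """Deepest dip and highest peak when near and far mics are summed.

    Only meaningful for ratios greater than 1; at exactly 1 the two
    signals are equal and the dip is total cancellation.
    """
    bleed_gain = 1 / distance_ratio
    dip = 20 * math.log10(1 - bleed_gain)   # bleed fully out of phase
    bump = 20 * math.log10(1 + bleed_gain)  # bleed fully in phase
    return dip, bump

for ratio in (1.5, 2, 3):
    dip, bump = worst_case_ripple_db(ratio)
    print(f"{ratio}:1  bleed {bleed_level_db(ratio):.1f} dB, "
          f"dip {dip:.1f} dB, peak {bump:.1f} dB")
```

At 3 to 1, the bleed is about 9.5 dB down, and the worst dip it can cause is about 3.5 dB. That's audible, but nowhere near the complete cancellation you'd get from equal levels.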

Other Potential Phase Issues

Parallel Processing

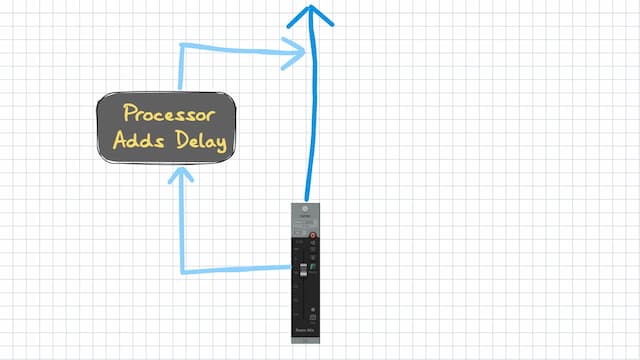

Your DAW should handle this internally, but just in case . . .

Parallel processing is when you split a signal. Some of the signal is left untouched, and some goes through an effect, such as compression, and then is combined back with the untreated signal. Well, it takes a bit of time to process the signal. So, potentially, when the signals are joined back together, you'll have phase issues.

But your recording software should calculate the time offset needed, and delay all of your tracks by that much, to get everything back in sync.
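As a toy illustration of that compensation, the sketch below mixes a dry signal with a 'processed' copy that arrives a few samples late, first without and then with a matching delay on the dry path. Everything here is invented for the example: the three-sample latency, the tiny signal, and the helper names. It isn't how any particular DAW implements this internally.

```python
def delayed(signal, samples):
    """Shift a signal later in time by adding silence in front of it."""
    return [0.0] * samples + list(signal)

def mix(a, b):
    """Sum two signals, padding the shorter one with silence."""
    length = max(len(a), len(b))
    a = list(a) + [0.0] * (length - len(a))
    b = list(b) + [0.0] * (length - len(b))
    return [x + y for x, y in zip(a, b)]

LATENCY = 3  # hypothetical processing latency, in samples

dry = [0.0, 1.0, 0.5, 0.0]
# Stand-in for the processed path: a quieter copy that arrives late.
wet = delayed([s * 0.5 for s in dry], LATENCY)

print(mix(dry, wet))                    # two smeared copies of the hit
print(mix(delayed(dry, LATENCY), wet))  # one clean, reinforced hit
```

Delaying the dry path by the same amount lines the two copies back up, so they reinforce each other instead of smearing.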

Outboard Gear

If you're using outboard gear in your session, and recording on a DAW, you might have to phase/time align stuff. In this case, you might be sending out to an actual hardware compressor and back into your DAW. You might need to compensate for the latency in this situation. After all, the signal has to go out of your DAW, get converted to analog, go through the compressor and out, get converted back to digital before it hits your DAW again. This takes time.

The procedures for compensating for roundtrip latency are different from DAW to DAW. You might have to check your manual on this one, folks.

Cable Wiring

Mic cables are typically XLR. They have one ground wire and two additional wires to carry what's called a balanced signal. XLR cables are pretty clever. They use phase reversal to eliminate noise.

The two signal carrying wires are out of phase. Then, at the end of the cable, the phase is flipped and the signals added back together. Why would you do that?

Well, any spurious noise such as radio interference will be picked up by both wires. When the phase is reversed at the end, the noise signals will be out of phase, and the musical signals back in phase. So, your noise gets canceled out. And that's one reason XLR cables can carry signals a lot further than unbalanced cables.
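The arithmetic behind that trick is simple enough to sketch. Flipping one wire and adding is the same as subtracting one wire from the other, which is what this example does. The signal and noise values are made up, and the sketch assumes the noise lands identically on both wires, which is the ideal case.

```python
def balanced_receive(hot, cold):
    """Flip the cold wire and add it to the hot wire (i.e. hot minus cold)."""
    return [h - c for h, c in zip(hot, cold)]

signal = [0.0, 0.5, 1.0, 0.5, 0.0, -0.5]
noise = [0.25, -0.125, 0.25, 0.0, -0.25, 0.125]  # made-up interference

hot = [s + n for s, n in zip(signal, noise)]     # signal plus noise
cold = [-s + n for s, n in zip(signal, noise)]   # flipped signal plus same noise

print(balanced_receive(hot, cold))  # twice the signal, none of the noise
```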

That's how it's supposed to work, anyway. However, if you've got a mic cable that has been wired backwards, you might run into phase problems. If you do, switch out the cable.

Drum Microphones & Phase

Some things are possible with modern DAWs, which were not possible back in the day, on magnetic tape. One of them is phase aligning drum microphones (or any microphones, really).

Let's face it. If you've got overhead mics on a drum kit, they're further away from the snare drum than the microphone that's sitting right on top of the snare. That means the snare sound is going to hit the overheads a fraction of a second later than it hits the snare mic. Thus, potential phase issues.

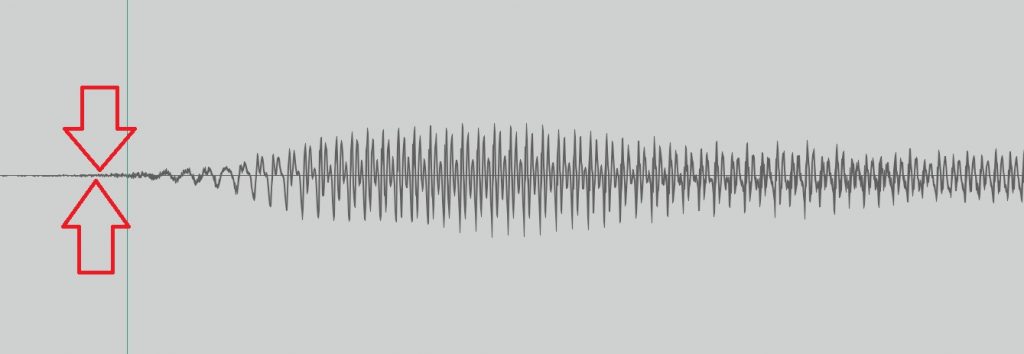

These days, you can phase align your microphones, in the DAW. Basically, it means you locate a transient (a sharp sound with an easy-to-spot beginning) for the same event on various tracks and line them up. You need to zoom all the way in to do this.

In other words, you take a snare hit on your overheads and a snare hit on your snare mic. You zoom in all the way and see the overhead mics are a wee bit behind the close mic. You move the overhead tracks earlier in time, to match the snare hit exactly.

Some people find this can add punch to certain drums.
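If you'd rather estimate the offset than eyeball it, here are two rough approaches in one sketch. The first converts extra distance into samples, assuming a speed of sound of 343 m/s. The second slides the late track against the close mic and keeps the shift where they match best (a brute-force cross-correlation). The function names and the tiny 'snare hit' are invented for the example; this isn't what any specific alignment plugin does.

```python
SPEED_OF_SOUND_M_S = 343.0  # assumed speed of sound

def delay_samples(extra_distance_m, sample_rate=48000,
                  speed=SPEED_OF_SOUND_M_S):
    """How many samples later the sound reaches the more distant mic."""
    return round(extra_distance_m / speed * sample_rate)

def best_lag(reference, late, max_lag):
    """Shift (in samples) at which `late` lines up best with `reference`."""
    best, best_score = 0, float("-inf")
    for lag in range(max_lag + 1):
        score = sum(r * late[i + lag]
                    for i, r in enumerate(reference)
                    if i + lag < len(late))
        if score > best_score:
            best, best_score = lag, score
    return best

def advance(signal, samples):
    """Move a track earlier in time by dropping its first samples."""
    return list(signal[samples:])

snare_mic = [0.0, 0.0, 1.0, -0.6, 0.3, 0.0, 0.0, 0.0, 0.0, 0.0]
overhead = [0.0] * 5 + [0.5 * s for s in snare_mic]  # quieter, 5 samples late

lag = best_lag(snare_mic, overhead, max_lag=8)
aligned_overhead = advance(overhead, lag)
print(lag)
print(delay_samples(1.0))  # samples of delay for 1 meter of extra distance
```

One meter of extra distance works out to roughly 140 samples at 48kHz, which is why you have to zoom in so far to see it.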

There are plugins that can help you with this sort of thing, as well, such as the Voxengo PHA-979 Phase Rotation Plugin. It provides some metering to help you know when you're in phase.

Phase Reversal Switches

Phase reversal switches do what you might expect. They flip the phase on a signal. This can be useful to solve phase issues when you have multiple microphones in a room. It's just a 180 degree flip (phase can be measured in degrees, and 180 degrees is perfectly out of phase), so it's not going to solve every phase issue. But if you have a drum mic suddenly sounding thin, try flipping the phase back-and-forth on a couple of mics and see if anything improves.

Phase Meters

Correlation Meters

There are phase correlation meters, which are typically used to show the phase correlation between left and right channels. As a general rule, they should stay between 0 and +1. +1 is perfectly correlated, meaning the two sides are the same -- mono. 0 means a wide stereo spread, but mono compatible.

Dipping down into the negative numbers is ok, in short bursts. But if your meter spends significant time down there, your mix probably won't do well in mono.

A phase correlation meter on a mono source, showing +1

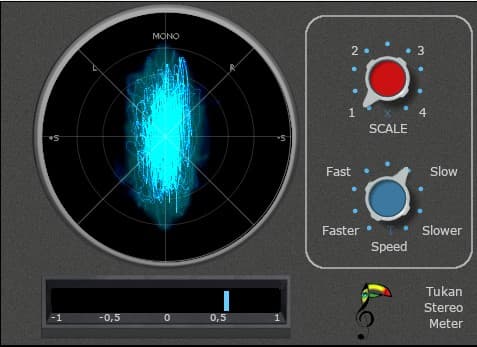
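The number a correlation meter shows can be approximated with a normalized correlation between the two channels. The sketch below computes it over a whole block of samples. Real meters work on a short moving window and may differ in the details, so treat this as an illustration of the idea rather than any plugin's actual formula. The function name `correlation` is made up.

```python
import math

def correlation(left, right):
    """Normalized correlation between two channels, from -1 to +1."""
    cross = sum(l * r for l, r in zip(left, right))
    energy = math.sqrt(sum(l * l for l in left) * sum(r * r for r in right))
    return cross / energy if energy else 0.0

cycles = [2 * math.pi * n / 100 for n in range(1000)]  # 10 full cycles
sine = [math.sin(x) for x in cycles]
cosine = [math.cos(x) for x in cycles]   # same tone, 90 degrees apart
flipped = [-s for s in sine]             # same tone, 180 degrees apart

print(round(correlation(sine, sine), 3))     # mono
print(round(correlation(sine, cosine), 3))   # unrelated-looking channels
print(round(correlation(sine, flipped), 3))  # fully out of phase
```

Identical channels land at +1, channels a quarter cycle apart land at about 0, and a polarity-flipped copy lands at -1.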

Goniometer

A goniometer (also called a phase scope, or vector scope) can give you some information about phase. It shows the width of your mix from side to side. A mono signal would show as a straight line.

As a general rule, in a stereo mix, you're looking for an oval shape, similar to the shape below. Too much wider and you might want to check that mix in mono. Too much narrower, and you need to find a way to add some width to your mix -- possibly by panning instruments more to the sides.

A Goniometer, with a correlation meter below
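Under the hood, a display like this is commonly drawn by plotting each left/right sample pair as a point, rotated 45 degrees so that what the channels share goes up and down and what differs goes side to side. The sketch below assumes that convention. Which side of the display the left channel leans toward varies between meters, and the function name `goniometer_points` is hypothetical.

```python
import math

def goniometer_points(left, right):
    """Turn (L, R) sample pairs into (x, y) plot points, rotated 45 degrees."""
    scale = 1 / math.sqrt(2)
    return [((r - l) * scale, (l + r) * scale) for l, r in zip(left, right)]

mono = [0.5, -0.25, 1.0]
out_of_phase = [-s for s in mono]

print(goniometer_points(mono, mono))          # every x is 0: a vertical line
print(goniometer_points(mono, out_of_phase))  # every y is 0: a horizontal line
```

A mono signal has no side-to-side component, so it draws a straight vertical line. A fully out-of-phase signal draws a horizontal one, which is the shape to worry about.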

Resources

Voxengo Span: Voxengo Span is a free, useful frequency analyzer which includes a peak meter, and a correlation meter. Windows & Mac.

Voxengo PHA-979, Phase Rotation Plugin: For time aligning signals.

REAPER JS Plugins: Specific to REAPER, the REAPER Stash is where you can find themes, plugins, effects, meters and more, written in the JS language. The Reaper Blog has a great tutorial on how to install these REAPER-only plugins.

Tukan plugins (Reaper only): The Tukan Goniometer shown above is a free plugin for Reaper, in the JS language. Myk Robinson has a nice tutorial on how to install them.

Conclusion

So, that's phase as it relates to home music production for independent musicians.

Enjoy,

Keith